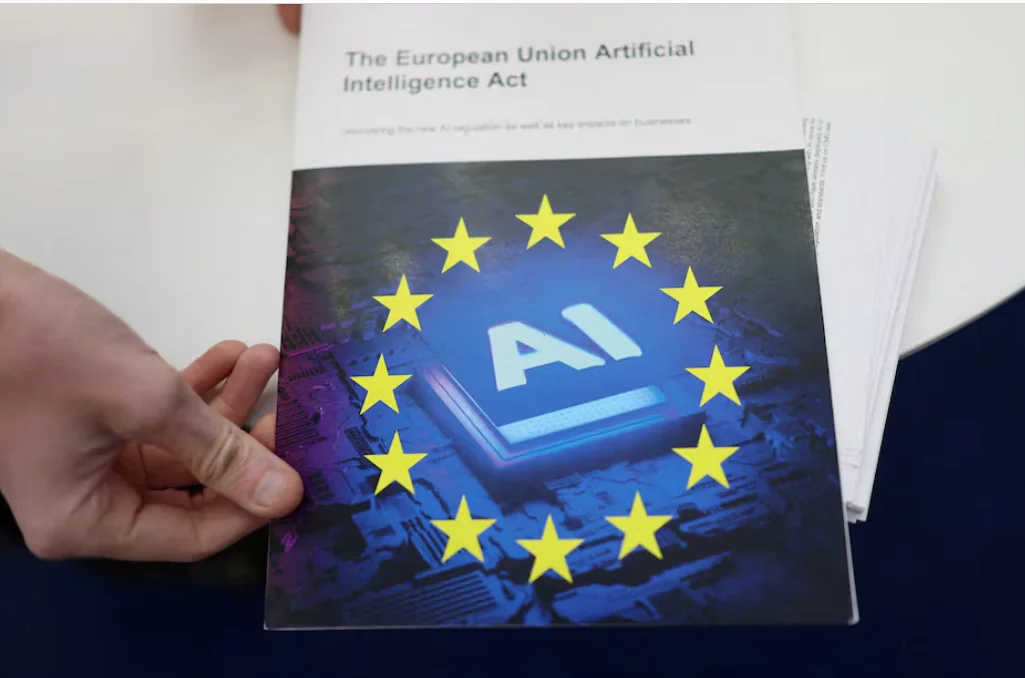

The European Union (EU) has reaffirmed its commitment to implementing the Artificial Intelligence Act (AI Act) on schedule, despite mounting pressure from major tech companies and some member states to delay the rollout of the landmark legislation.

Speaking at a press conference on Friday, European Commission spokesperson Thomas Regnier made it clear that there will be no pause or grace period for the enforcement of the EU’s AI regulation framework. “There is no stopping the clock. There is no grace period. There is no pause,” Regnier stated. “We have legal deadlines established in a legal text.”

AI Act Timeline: Key Deadlines to Know

The AI Act, which entered into force in August 2024 and whose first obligations began to apply in February 2025, sets out a phased approach to regulation, with key upcoming implementation dates as follows:

- August 2025: Obligations begin for general-purpose AI models

- August 2026: Compliance requirements take effect for high-risk AI systems

These rules aim to establish trustworthy AI frameworks across the EU, covering transparency, accountability, and safety, especially in sectors such as healthcare, transportation, education, and finance.
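For teams tracking these dates in their own tooling, the two milestones listed above can be captured in a small lookup. This is only a sketch: the names `MILESTONES`, `obligations_in_effect`, and `days_until` are hypothetical, and the day of the month (the 2nd) is not given in this article and should be verified against the legal text.

```python
from datetime import date

# Milestones named in the article. The article gives only month and year;
# the day of the month used here is an assumption to check against the Act.
MILESTONES = {
    "general_purpose_ai_models": date(2025, 8, 2),
    "high_risk_ai_systems": date(2026, 8, 2),
}


def obligations_in_effect(on: date) -> list[str]:
    """Return the milestone keys whose start date is on or before `on`."""
    return sorted(key for key, start in MILESTONES.items() if start <= on)


def days_until(milestone: str, on: date) -> int:
    """Days remaining until a milestone (negative once it has passed)."""
    return (MILESTONES[milestone] - on).days


if __name__ == "__main__":
    today = date.today()
    print("In effect today:", obligations_in_effect(today))
    for name in MILESTONES:
        print(name, "starts in", days_until(name, today), "days")
```

A lookup like this is useful only as a reminder aid; it does not determine which obligations apply to a given system.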

Tech Giants and Startups Push for Delay

Several major companies, including Google’s parent company Alphabet, Meta (Facebook owner), and European firms such as Mistral and ASML, have recently urged the Commission to delay enforcement. Their concerns revolve around:

- High compliance costs

- Complex regulatory requirements

- Potential disadvantage for European AI startups competing with firms from the U.S. and China

Despite the pressure, the Commission has decided to keep to its timeline, signalling a continued emphasis on AI safety and accountability.

Support Measures for SMEs Under Consideration

While maintaining its regulatory timeline, the European Commission is considering simplifying digital compliance rules for small and medium-sized enterprises (SMEs). Proposals expected by the end of the year may include:

- Reduced reporting obligations

- Streamlined compliance procedures

- Support for innovation without compromising safety

This balanced approach seeks to ensure that the AI Act promotes ethical AI development without stifling European innovation.

Europe’s AI Regulation: A Global First

The EU AI Act is the world’s first comprehensive legal framework for artificial intelligence. It categorizes AI systems based on risk—unacceptable, high, limited, and minimal—and imposes proportionate obligations accordingly.
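The four risk tiers can be modelled as a simple enumeration when building an internal inventory of AI systems. The sketch below is illustrative only: `RiskTier`, `OBLIGATION_SUMMARY`, `group_by_tier`, the one-line obligation summaries, and the example systems with their tier assignments are all assumptions made for this example, not legal classifications.

```python
from collections import defaultdict
from enum import Enum


class RiskTier(Enum):
    """The four risk categories named in the article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified, non-authoritative summaries of what each tier implies.
OBLIGATION_SUMMARY = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "strict requirements, such as documentation and conformity assessment",
    RiskTier.LIMITED: "transparency duties",
    RiskTier.MINIMAL: "no mandatory obligations",
}


def group_by_tier(inventory: dict[str, RiskTier]) -> dict[RiskTier, list[str]]:
    """Group system names by their assigned tier, sorted alphabetically."""
    grouped: dict[RiskTier, list[str]] = defaultdict(list)
    for name, tier in inventory.items():
        grouped[tier].append(name)
    return {tier: sorted(names) for tier, names in grouped.items()}


if __name__ == "__main__":
    # Hypothetical inventory; real classification requires legal review.
    inventory = {
        "cv_screening": RiskTier.HIGH,
        "support_chatbot": RiskTier.LIMITED,
        "spam_filter": RiskTier.MINIMAL,
    }
    for tier, names in group_by_tier(inventory).items():
        print(f"{tier.value}: {names} -> {OBLIGATION_SUMMARY[tier]}")
```

Keeping the tier as an explicit field on each inventory entry makes it easier to revisit assignments as guidance documents and technical standards are published.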

By enforcing strict standards, the EU aims to:

- Protect fundamental rights

- Promote human-centric AI

- Establish Europe as a global leader in ethical AI governance

The AI Act also reflects the EU’s long-standing commitment to digital regulation, following earlier frameworks like the Digital Services Act (DSA) and the Digital Markets Act (DMA).

What’s Next for Businesses Using AI in the EU?

Businesses operating in or targeting the EU market should begin:

- Auditing AI systems for compliance classification

- Updating documentation and transparency practices

- Preparing for third-party conformity assessments, if applicable

- Staying informed on upcoming guidance documents and technical standards