South Korea’s SK Hynix expects the global market for high-bandwidth memory (HBM) chips, which are crucial for artificial intelligence, to grow at an annual rate of 30% through 2030, according to Choi Joon-yong, the company’s head of HBM business planning.

AI Demand Driving HBM Growth

In an exclusive interview with Reuters, Choi said demand for AI memory chips remains strong and resilient. He brushed aside concerns about rising price pressures in a sector whose products have historically been treated as commodities.

“AI demand from the end user is very firm and strong,” Choi said.

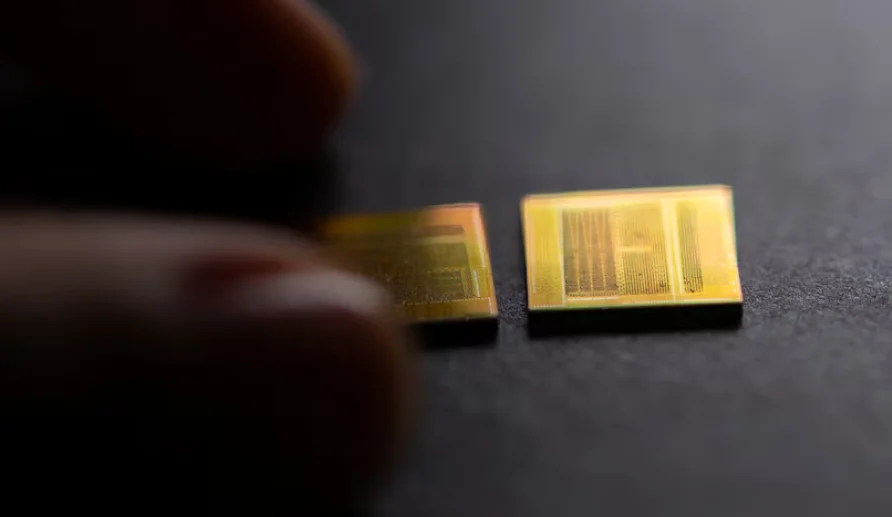

HBM technology, first produced in 2013, stacks memory chips vertically to save space, reduce power consumption, and handle the massive data loads that advanced AI applications require.

Tech Giants Fueling the Boom

Major cloud computing firms such as Amazon, Microsoft, and Google are investing billions of dollars in AI infrastructure. Choi expects these companies’ capital spending plans to be revised upward, which would further boost HBM demand.

Choi described the relationship between AI build-outs and HBM purchases as “very straightforward.” He added that SK Hynix’s forecast is conservative because it accounts for constraints such as available energy supply.

Tens of Billions by 2030

SK Hynix estimates the custom HBM market will be worth tens of billions of dollars by 2030. Competitors such as Micron Technology and Samsung Electronics are also racing to produce next-generation HBM4 chips.

Unlike previous versions, HBM4 incorporates a customer-specific logic die, a base chip that manages the memory. This makes it harder for buyers to simply swap in a competitor’s product.

A Strategic Shift in the Memory Industry

This customization marks a strategic shift in the memory chip business, away from interchangeable, commodity-like products and toward tailor-made, high-performance solutions for AI.